Why Nvidia & Meta CEOs think Quantum Computing is 20 years away

An industry primer on why the economics behind Quantum Computing Sucks

QUANTUM COMPUTING: INDUSTRY PRIMER

1. Google Willow and the Septillion Year Encryption

2. What Jensen Meant by “QC Is 20 Years Away”

3. The Engineering Challenges behind why QC is still 20 years away

4. Is D-Wave Actually A Fraud?

5. Who’s Right? Who Won? You Decide

"If you said 15 years for very useful quantum computers, that would probably be on the early side. If you said 30, it's probably on the late side," he said. "But if you picked 20, I think a whole bunch of us would believe it." - Jensen Huang, Nvidia CEO

“I think most people still think that’s (quantum computing) like a decade plus out” - Mark Zuckerberg, Meta CEO

NVIDIA’s CEO Jensen Huang turned heads last week when he claimed that the commercial viability of quantum computing at-scale was still 20 years away, and apparently Zuckerberg thinks so too. D-Wave’s CEO, who saw his company’s share price collapse by 40% in the wake of Jensen’s comments, immediately came out firing on all cylinders, pushing back against this narrative.

There has been reflexive commentary from multiple reputable publications in both camps since then, such as this Forbes article and the one linked below by Martin Shkreli.

So who’s right? This article aims to serve as an industry primer on quantum computing, equipping you with the necessary information so that you can decide for yourself. [1]

Google Willow and the Septillion Year Encryption

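A good place to start this topic would be somewhere most people are already familiar with: Google Willow’s recent quantum computing achievement. A quantum chip with 105 qubits, Willow completed a benchmark calculation (random circuit sampling) in under five minutes that, by Google’s estimate, would have taken a classical supercomputer 10 septillion (10^25) years. The feat is often retold as Willow cracking RSA encryption, but the benchmark has no direct cryptographic use; RSA is simply the target that quantum computing is most famous for threatening.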

But what’s a qubit? And what’s RSA? How are they related to quantum computing? Let’s dive in.

Most of us are already familiar with how classical computing works. At its most fundamental level, semiconductor chips work by having billions of transistor switches either allowing electrical current to flow through them or not. These “on/off” states can be represented as “1’s” or “0’s” in computer language, and when a gazillion of them run simultaneously it can apparently mimic even sentience itself.

Quantum computing turns this fundamental premise of computing on its head. At the subatomic level, weird things start to happen which classical physics cannot explain. A phenomenon called superposition exists, where a qubit (i.e. switch) can somehow represent a blend of “1” and “0” at the same time. A popular, if loose, way to visualize this is by imagining that parallel universes exist, and that multiple “on/off” states can simultaneously exist within a single “switch”. This allows the number of states a quantum computer can work with to scale exponentially with its qubit count (n qubits span 2^n combinations) rather than linearly as in classical computing, with incredible practical applications for business and society.
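To put rough numbers on that scaling: describing n qubits in superposition on a classical machine takes 2^n complex amplitudes, so the bookkeeping doubles with every qubit added. The sketch below is illustrative only. The function name `state_vector_cost` and the figure of 16 bytes per amplitude (one double-precision complex number) are assumptions made for this example, not anything taken from Google's work.

```python
def state_vector_cost(n_qubits: int, bytes_per_amplitude: int = 16) -> tuple[int, int]:
    """Return (amplitudes, bytes) needed to store a full n-qubit state classically.

    Assumes one complex128 value (16 bytes) per amplitude, which is an
    illustrative choice rather than a property of any particular simulator.
    """
    amplitudes = 2 ** n_qubits  # every extra qubit doubles the state space
    return amplitudes, amplitudes * bytes_per_amplitude


if __name__ == "__main__":
    for n in (1, 2, 10, 30, 50, 105):
        amps, mem = state_vector_cost(n)
        print(f"{n:>3} qubits -> {amps:.3e} amplitudes, {mem:.3e} bytes")
```

Thirty qubits already need about 17 GB under these assumptions, which is why simulating even a modest quantum chip exactly on classical hardware becomes impractical so quickly.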

So what feat did Google Willow manage to achieve? Quantum computing (QC) is best known for its theoretical ability to crack RSA encryption, which is one of the most widely used forms of public-key encryption today. Basically, RSA works by multiplying two very large prime numbers into a humungous number. The humungous number is public, while the two primes are kept secret; anyone who can factor the humungous number back into its primes can reconstruct the private key and read the message. With the best known classical methods, factoring a modern RSA key would take an impractically long time (far longer than the age of the universe for large keys, by common estimates), making it a very secure form of cryptography.
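A toy version of RSA makes the dependence on factoring easier to see. This is a minimal sketch using the tiny textbook primes 61 and 53; real keys use primes hundreds of digits long, and real implementations add padding and other safeguards omitted here. The helper names `factor` and `crack` are made up for this illustration.

```python
from math import gcd

# Toy key generation with deliberately tiny primes (illustration only).
p, q = 61, 53
n = p * q                    # public modulus: 3233
phi = (p - 1) * (q - 1)      # 3120, derived from the secret primes
e = 17                       # public exponent, must be coprime with phi
assert gcd(e, phi) == 1
d = pow(e, -1, phi)          # private exponent via modular inverse (Python 3.8+)

message = 65
ciphertext = pow(message, e, n)          # encrypt with the public key (e, n)
assert pow(ciphertext, d, n) == message  # decrypt with the private key d


def factor(modulus: int) -> tuple[int, int]:
    """Factor by trial division: instant here, hopeless for real key sizes."""
    f = 2
    while f * f <= modulus:
        if modulus % f == 0:
            return f, modulus // f
        f += 1
    raise ValueError("modulus is prime")


def crack(modulus: int, public_exponent: int, secret: int) -> int:
    """Recover the plaintext using only public values, by factoring the modulus."""
    a, b = factor(modulus)
    recovered_d = pow(public_exponent, -1, (a - 1) * (b - 1))
    return pow(secret, recovered_d, modulus)


print(crack(n, e, ciphertext))
```

The attacker never sees `p`, `q`, or `d`; factoring `n` is enough to rebuild all three. Everything about RSA's security rests on that factoring step being infeasible at real key sizes.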

However, since the state space of a quantum computer grows exponentially with its qubit count, a quantum algorithm such as Shor’s can find those prime factors in a feasible number of steps instead of grinding through brute-force guesses. That is not what Willow did, though: its five-minute result was the random circuit sampling benchmark, and breaking a real RSA key would require far more error-corrected qubits than Willow’s 105. What Willow signals is that the hardware is on a credible path, which is why people describe it as the beginning of the end for RSA encryption.

While there are plenty of QC applications beyond simply cracking RSA encryption, it gets the most screen time because of the sheer cool factor of saying “septillion” to a public audience. I won’t dive into the math behind it (i.e. Shor’s algorithm), but you can watch the Veritasium video on the subject for a quick primer on how a quantum computer would go about attacking RSA.

But this raises the question: if it’s so cool, why did Jensen Huang say that quantum computing at-scale is still 20 years away?

What Jensen Meant by “QC Is 20 Years Away”

One massive problem faced by real-world applications of quantum computing today is something called error correction. To understand why, we first need to understand the engineering behind quantum computing.

To illustrate, let’s start from classical computing. Semiconductors like silicon are called as such because they are literally semi-conductors: depending on the state they are in, they can either conduct electricity or not. This gives silicon its ability to act as electrical “gates”, which form the basis of the transistor switches it is known for and, subsequently, their representation as “1’s” or “0’s” in computer language.

Quantum computers take things a step further. Many of today’s designs, Willow included, build their qubits out of superconducting circuits, the best-known type being the “transmon”. When superconductors are cooled below a critical temperature (a few kelvin for common metals), they demonstrate zero electrical resistance. Quantum chips are run far colder still, at a small fraction of a degree above absolute zero (0 K), so that thermal noise does not disturb the qubits. This is important in quantum computing because it allows qubits to exist. Why?

Well, apparently when you are a subatomic entity that can simultaneously occupy multiple universes, you phase in and out of existence very rapidly. This is what qubits in superposition are like: they typically hold their state for only a tiny fraction of a second (on the order of tens of microseconds for today’s superconducting chips), even at temperatures near absolute zero. The technical term for a qubit holding its quantum state is “coherence”; decoherence occurs when external interference like vibrations or electrical signals bump into the qubit, causing it to collapse. This is obviously a problem when attempting to use qubits as the foundation of quantum computing, since you can barely get them to exist long enough to compute with them and measure their states as “1’s” or “0’s”.

As with most impossible things, the solution to this is simply to do much more of it. It was already well-known prior to this that you could use roughly 100 physical qubits (i.e. real) to encode 1 logical qubit (i.e. synthetic), so that errors in individual physical qubits can be detected and outvoted by the rest. [2] What Google Willow was able to prove was that error correction via scalability was feasible: namely, that adding more physical qubits decreases the error rate of logical qubits.
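The intuition behind "more physical qubits, fewer logical errors" can be sketched with a classical analogy: store one bit as n noisy copies and take a majority vote. This is only an analogy under stated assumptions. Willow uses a quantum surface code, not a repetition code, and the 50% break-even point below is a property of this toy model, not of real hardware. The function name is hypothetical.

```python
from math import comb


def logical_error_rate(p: float, n: int) -> float:
    """Chance that a majority vote over n copies is wrong.

    p is the independent error probability of each copy; n should be odd.
    The vote fails when more than half of the copies are flipped.
    """
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


if __name__ == "__main__":
    for p in (0.01, 0.6):
        rates = [logical_error_rate(p, n) for n in (1, 3, 5, 7)]
        print(p, [f"{r:.6f}" for r in rates])
```

With a 1% per-copy error rate, each step up in redundancy shrinks the logical error rate sharply. With a 60% per-copy error rate, adding copies makes things worse. That is the sense in which error correction only pays off once the underlying hardware is good enough.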

This is a big deal since error correction is by far the biggest practical challenge of making QC feasible. And since scaling up the number of physical qubits also increases the lifespan of logical qubits, such scalability translates to exponential gains in making QC feasible.

However, that’s just the tip of the iceberg. Willow was more or less the quantum equivalent of a single transistor switch, and all it proved was that it was possible to make logical gates from superposed qubits, not that it was practical. For reference, there are 16 billion transistors in an iPhone 16 chip, and Willow was the quantum equivalent of only one of them.

This implies that we are still decades away from quantum computing as a general business concern or at industrial scale, outside of perhaps highly specialized scenarios like cracking RSA encryption. If AI’s progress today is where the Internet was in 1997, QC’s progress today is still somewhere in the late-70’s prior to the conception of TCP/IP. All we’ve done so far is prove that it is possible, not that it is economical. We still need to solve all the engineering challenges of quantum computing before it achieves that — actually, why don’t we talk about that right now?

The Engineering Challenges behind why QC is still 20 years away

As aforementioned, quantum computing of this kind requires cooling superconducting chips to within a fraction of a degree of absolute zero. Unlike classical computing, you can’t just plop a chip into a motherboard at room temperature: you actually need cryogenic cooling with liquid helium (usually in a device called a dilution refrigerator), and an enclosure that keeps the chip isolated inside it. Multiply this by the number of quantum chips you plan on using, and you can see how the operational costs become far more expensive vs. running a classical supercomputer cluster.

On top of that, that enclosure also needs to create a vacuum within it; and have sufficient shielding from external interference to enable those qubits to exist. Even the tiniest vibration from the bacterial equivalent of a pin drop can cause a qubit to collapse, so you need some sort of mega-dampener to insulate that enclosure from the elements. Again, scaling up such complex infrastructure requirements ramps up the operational costs exponentially vs. classical computing.

Recall also that qubits theoretically phase in and out of existence between multiple universes, and only hold their state for a tiny fraction of a second when they do. As aforementioned, the solution to this is to use roughly 100 physical qubits to represent one logical/synthetic qubit, which implies needing millions of physical qubits in order to put together what you could colloquially call a “quantum computer”. Google Willow had only 105 of them. Think about the costs (and sheer amount of space) involved in preparing 10,000x more qubits for just a single quantum computer.
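The arithmetic in that paragraph can be laid out explicitly. This sketch uses only the article's own figures: Willow's 105 qubits, the illustrative 100-to-1 ratio (which footnote 2 admits is made up), and the 10,000x scale-up. The function names are hypothetical.

```python
WILLOW_PHYSICAL_QUBITS = 105   # figure quoted in the article
PHYSICAL_PER_LOGICAL = 100     # the article's illustrative (made-up) ratio


def logical_qubits_available(physical: int, ratio: int = PHYSICAL_PER_LOGICAL) -> int:
    """Whole logical qubits that a given number of physical qubits can encode."""
    return physical // ratio


def physical_qubits_needed(logical: int, ratio: int = PHYSICAL_PER_LOGICAL) -> int:
    """Physical qubits required for a target number of logical qubits."""
    return logical * ratio


if __name__ == "__main__":
    target_physical = WILLOW_PHYSICAL_QUBITS * 10_000
    print("Willow today:", logical_qubits_available(WILLOW_PHYSICAL_QUBITS), "logical qubit")
    print("10,000x Willow:", target_physical, "physical qubits")
    print("which encodes:", logical_qubits_available(target_physical), "logical qubits")
```

Under these assumptions Willow amounts to a single logical qubit, and even a 10,000-fold scale-up yields only about ten thousand of them.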

You’d also need all the incremental circuitry to connect all the qubits together, so that they can communicate and behave like computers, as well as all the aforementioned shielding to prevent such communication from collapsing, including interference from each other’s existence. This topic could take up an entire essay in and of itself, but just like the aforementioned examples, it is vastly more complex to implement than its classical computing equivalent.

But wait, that’s just hardware! Apparently, multiversal qubits also do not speak the same language as their classical counterparts, and you’d need a translation layer to translate quantum “1’s” and “0’s” into classical form so that they can behave alongside their sidekick computers. This is called Quantum-Classical Integration, and is an entire field in and of itself.

Moreover, the application layer of quantum computing is still practically non-existent today. Quantum algorithms exist, but on today’s hardware they have yet to outperform classical algorithms on commercially useful problems. It will take years if not decades before quantum algorithms can outstrip their classical counterparts in practice.

These are far from all the engineering headwinds which quantum computing will have to solve before it can achieve industrial scale, but it gives you a sense of the sheer enormity of the task behind making QC feasible from an economics standpoint vs. classical computing. That should be enough to inform you whether Jensen was right about QC at-scale requiring another 20 years to realize.

Is D-Wave Actually A Fraud?

The rebuttal from the “QC is already here” camp (e.g. D-Wave’s CEO) is that Jensen’s comments were taken out of context. Their position is basically that Jensen was referring to the development of a truly mature QC industry; whereas quantum computing actually does exist in a less significant but still material form today.

Take D-Wave for example. Their website goes into great detail explaining what they do, a process called quantum annealing. The short version is that physical systems tend to settle into a state of minimum energy, or rest state. Quantum annealing exploits this tendency: a problem is encoded into the interactions between qubits so that the lowest-energy configuration corresponds to the best answer, and the superposed, entangled qubits (steered with magnetic fields) are then allowed to settle into it. In theory, this method can be used as a quantum computer.

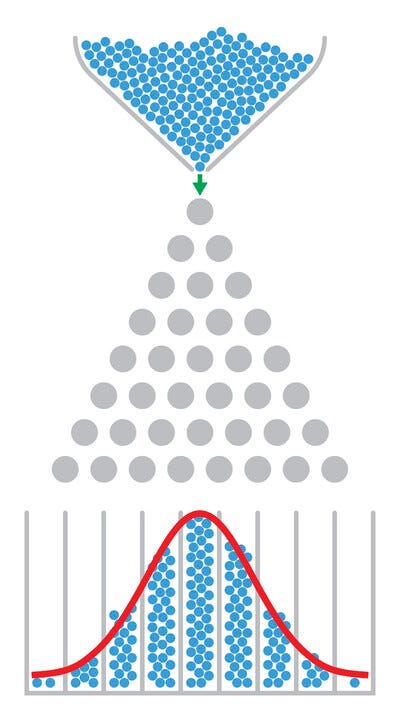

However, quantum annealing isn’t widely considered to be “real” quantum computing — the physics phenomenon described above can only be used to solve optimization problems known as Quadratic Unconstrained Binary Optimization (QUBO) problems. Since most things in reality seek a natural final rest state, you could use quantum annealing to simulate real-life conditions by inputting their criteria into a quantum annealing model and letting the natural outcome play out. This basically makes quantum annealing the quantum version of the Galton Board:

However, this method lacks the “gate-like” characteristics of transistor switches (“1’s” and “0’s”) that allow it to function as a true computer — all it does is simulate optimization models using quantum properties. While this does technically qualify D-Wave’s devices as quantum computers, they are computers only if you’re trying to solve optimization or sampling problems and almost nothing else. For instance, they might be great if you’re trying to run a Monte Carlo simulation; but you can’t really adapt quantum annealing to run say a Word document or even Paint.
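To give a feel for what a QUBO problem looks like, here is a minimal classical sketch that finds the lowest-"energy" assignment of a tiny problem by trying every combination. The toy problem (reward picking each of three items, penalize picking two neighbors) and all names are made up for illustration. A quantum annealer aims to settle into the same minimum physically instead of enumerating the options, and nothing here uses D-Wave's actual software.

```python
from itertools import product


def qubo_energy(Q: dict, x: tuple) -> float:
    """Energy of binary assignment x: the sum of Q[i, j] * x[i] * x[j]."""
    return sum(w * x[i] * x[j] for (i, j), w in Q.items())


def brute_force_minimum(Q: dict, n: int) -> tuple:
    """Try all 2^n binary assignments and return the one with the lowest energy."""
    return min(product((0, 1), repeat=n), key=lambda x: qubo_energy(Q, x))


# Hypothetical toy problem: each chosen item lowers the energy by 1,
# but choosing neighboring items (0 and 1, or 1 and 2) costs 2.
Q = {
    (0, 0): -1, (1, 1): -1, (2, 2): -1,
    (0, 1): 2, (1, 2): 2,
}

best = brute_force_minimum(Q, 3)
print(best, qubo_energy(Q, best))
```

Brute force needs 2^n evaluations, which is fine for three variables and hopeless for thousands. That gap is the niche annealers are pitched at, and also why they are of little help with tasks that cannot be phrased as an energy minimization.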

I do not want to make D-Wave look like bad guys here, because quantum annealing is a legit quantum process and reading their work has been nothing short of an intellectual adventure. However, the fact remains that their devices do not meet the colloquial definition of “computer” in all but the most technical terms. D-Wave also hasn’t been profitable since their inception in 1999; and in fact has sported an average Net Margin of -600% since 2020 (listed in 2022). I’ll defer to Martin Shkreli for the remainder concerning D-Wave’s long-term viability as a business:

Who’s Right? Who Won? You Decide

As both D-Wave’s CEO and the Forbes article highlighted, there are most certainly real-world applications which could technically qualify as quantum computing already being carried out today. However, “useful” QC as Jensen puts it will indeed likely take 20 years to emerge or become like what the AI sector is today.

It’s important to recognize that QC in its current form is still a frontier technology, more akin to an MVP or Phase 1 FDA drug than something that’s actually ready for retail. However, the opportunity to see something that will change the world (again) while still in such a nascent stage is a rare one — and I’m highly appreciative of bearing witness to humanity’s hockey stick of progress already having something locked and loaded in reserve.

By the way, did you notice that I didn't bring up Schrödinger's cat even once throughout this entire explanation?

Disclaimer: This document does not in any way constitute an offer or solicitation of an offer to buy or sell any investment, security, or commodity discussed herein or of any of the authors. To the best of the authors’ abilities and beliefs, all information contained herein is accurate and reliable. The authors may hold or be short any shares or derivative positions in any company discussed in this document at any time, and may benefit from any change in the valuation of any other companies, securities, or commodities discussed in this document. The content of this document is not intended to constitute individual investment advice, and are merely the personal views of the author which may be subject to change without notice. This is not a recommendation to buy or sell stocks, and readers are advised to consult with their financial advisor before taking any action pertaining to the contents of this document. The information contained in this document may include, or incorporate by reference, forward-looking statements, which would include any statements that are not statements of historical fact. Any or all forward-looking assumptions, expectations, projections, intentions or beliefs about future events may turn out to be wrong. These forward-looking statements can be affected by inaccurate assumptions or by known or unknown risks, uncertainties and other factors, most of which are beyond the authors’ control. Investors should conduct independent due diligence, with assistance from professional financial, legal and tax experts, on all securities, companies, and commodities discussed in this document and develop a stand-alone judgment of the relevant markets prior to making any investment decision.

[1] Full disclosure: I’m not a quantum computing engineer. Actual QC engineers are free to excoriate my mistakes in the comments.

[2] I'm making up the numbers here, but it's not that far off.

Given that this is a business primer (a good one!) and not a rigorous technical one, just a few corrections.

First, superconductors do not require absolute zero: tin becomes superconducting at 3.7 kelvin and lead at 7.2. Liquid helium sits at 4.2 kelvin and is used to bring metals into the superconducting state. Temperatures as close to absolute zero as possible are needed to isolate qubits from external interactions, not to create the superconducting effect.

A more serious point, though, concerns QC applications. The main challenge comes from the fact that while QC operates on millions or billions of numbers simultaneously, the final result of the computation is a single number, since measurement collapses all those qubit states. Shor’s genius was in finding an algorithm where this number would be useful: factoring large numbers into primes. There is a handful of applications (e.g. Grover’s search) that benefit from QC’s nature, but to claim that there are multiple QC algorithms is misleading and is expected on a grant proposal 🤘

Thanks